For quite some time I have been doing TDD and Continuous Integration (CI), in both my private and my professional projects. My preferred unit testing framework is xUnit, and I recently had to set it up with Team Foundation Server (TFS) to do CI. It turned out this was not a trivial task. One thing was to get TFS to run the tests; another was to get TFS Reporting to work and show the test results. This blog post shows the steps I took. I hope it will inspire others and help them avoid some of the issues and frustrations I ran into. Any comments or feedback will be greatly appreciated.

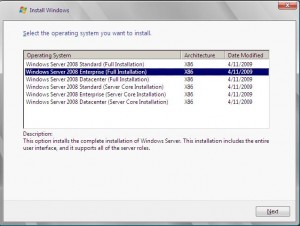

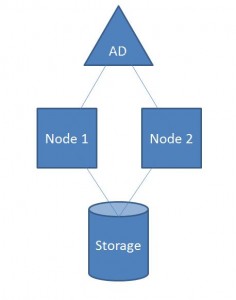

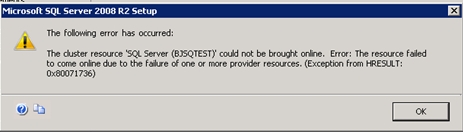

The first step is to install TFS 2010. The following describes how to install it on Windows Server 2008 and SQL Server 2008; however, the steps are the same for Windows Server 2008 R2 and SQL Server 2008 R2.

I will not go into great detail about how to install the server products. There are already good guides available on the web, so please drop me an email or reach me on Twitter if you need assistance with this part of the process.

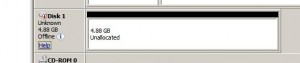

Install Windows Server 2008 (Enterprise) on a server and apply Service Pack 2 (SP2).

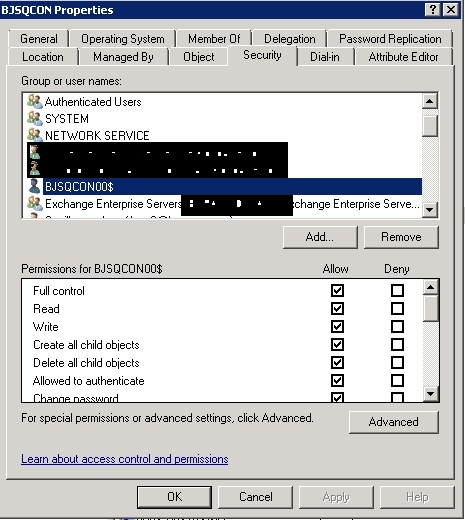

Create a user named, e.g., TFS and add this user to the Administrators group.

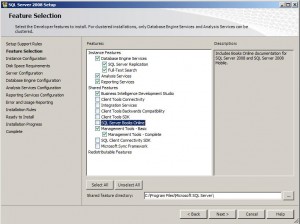

Install SQL Server 2008 (Enterprise Edition) with all components. I also chose to install the Business Intelligence Development Studio, which will allow me to create TFS reports.

Install Reporting Services in Native mode, but select the option to NOT configure Reporting Services yet. Do not install it in SharePoint Integrated mode.

Add the TFS user as a login on the SQL Server instance you just installed.

Install WSS (Windows SharePoint Services) 3.0 and Service Pack 2. Do not run the Configuration Wizard at the end.

At this point you should be able to see the default SharePoint site at http://localhost.

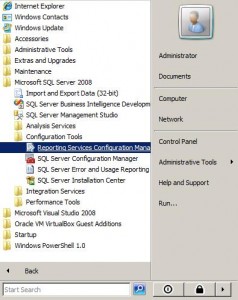

Open the SQL Server Reporting Services Configuration Tool and configure Reporting Services now.

The tool takes you through the configuration step by step and lets you specify the user, create the database, etc. When asked for user credentials, specify the user created earlier.

When configuring the database, keep the default setting, Native Mode, when asked for the Report Server Mode.

When the configuration has completed, you should be able to see the Reporting Services portal and web service, e.g. at http://localhost/Reports and http://localhost/ReportServer.
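If you prefer to check these addresses from a script rather than a browser, something like the following Python sketch could be run on the server. It is my own illustration, not part of the setup: the URLs are the defaults mentioned above, and treating HTTP 401 as "up" is an assumption based on SharePoint and Reporting Services normally requiring Windows authentication, which plain `urllib` does not perform.

```python
from typing import Callable, Dict, Iterable
from urllib.error import HTTPError
from urllib.request import urlopen

# Default addresses from the steps above; adjust host and port to your installation.
DEFAULT_URLS = [
    "http://localhost",               # default SharePoint (WSS 3.0) site
    "http://localhost/Reports",       # Reporting Services portal
    "http://localhost/ReportServer",  # Reporting Services web service
]


def fetch_status(url: str) -> int:
    """Return the HTTP status code for url, or 0 if no HTTP response was received."""
    try:
        with urlopen(url, timeout=10) as response:
            return response.status
    except HTTPError as err:  # the server answered with an error status, e.g. 401
        return err.code
    except OSError:  # connection refused, DNS failure, timeout (URLError is an OSError)
        return 0


def check_endpoints(urls: Iterable[str],
                    fetch: Callable[[str], int] = fetch_status) -> Dict[str, bool]:
    """Map each URL to True if it answered with a success, redirect or 401 status."""
    results = {}
    for url in urls:
        status = fetch(url)
        # Assumption: 401 means the site is up but wants Windows authentication.
        results[url] = 200 <= status < 400 or status == 401
    return results
```

On the server, `check_endpoints(DEFAULT_URLS)` returns a dictionary mapping each URL to True or False. The `fetch` parameter only exists so the logic can be tried without a live server.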


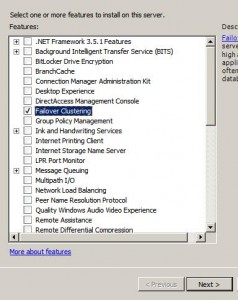

Now it is time to install TFS. Initiate the installation and select the features you wish to install, most likely all but the Team Foundation Server Proxy.

You will probably need to restart the server during the installation – after all, this is Microsoft – but otherwise the installation should run smoothly.

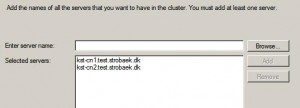

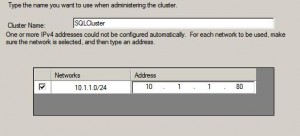

Once done, press the Configure button to launch the Configuration Tool. Select the configuration appropriate to your needs. I selected the Standard configuration for this lab.

When asked for the Service Account, enter the user you created previously.

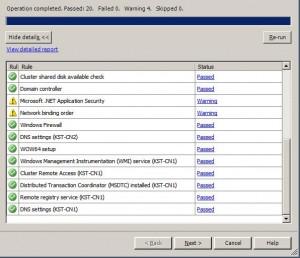

If you do not pass all the verification checks, correct the issues and re-run the verification. Once everything is green, you should be able to complete the configuration.

If you want to read more about TFS, check out the blog posts by Richard Banks and Ewald Hofman.

You now have a running TFS, but we are far from finished. To complete what we set out to do, we now have to create and modify a build configuration.

A disclaimer: the following example has some hard-coded values. The correct way to do this would be to create a custom activity, put it in TFS and reference it, but I will leave that for another post.

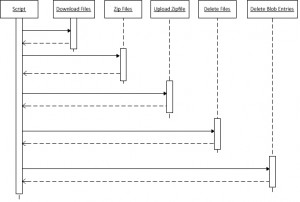

In order to run our unit tests using xUnit and publish the results, we need to copy two items to the TFS server: xUnit and NUnitTFS.

I placed them in C:\Tools\ on the server. Do not put any spaces in the folder names; this will make your life easier later on.
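If you script the tool setup, a small check like this Python sketch can catch spaces (and relative paths) before they surface as a failing build. The example paths `C:\Tools\xUnit\xunit.console.exe` and `C:\Tools\NUnitTFS\NUnitTFS.exe` are hypothetical; use wherever you actually unpacked the tools. My assumption is that spaces cause trouble because such paths need extra quoting wherever they are passed on.

```python
from pathlib import PureWindowsPath
from typing import List

# Hypothetical locations under C:\Tools; adjust to where you put the tools.
TOOL_PATHS = [
    r"C:\Tools\xUnit\xunit.console.exe",
    r"C:\Tools\NUnitTFS\NUnitTFS.exe",
]


def path_problems(path: str) -> List[str]:
    """Return human-readable problems with a tool path; an empty list means it looks fine."""
    problems = []
    if " " in path:
        # Paths with spaces would need extra quoting wherever they are passed on.
        problems.append(f"contains a space: {path}")
    if not PureWindowsPath(path).is_absolute():
        # A relative path depends on the working directory of whatever launches the tool.
        problems.append(f"is not an absolute path: {path}")
    return problems


for tool in TOOL_PATHS:
    for problem in path_problems(tool):
        print("WARNING:", problem)
```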

You will most likely need to tweak the NUnitTFS configuration a little: ensure that the client endpoints in its config file are set correctly, as by default they point to http://teamfoundation.
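If you have to fix the endpoints on several machines, the edit can be scripted. The Python sketch below assumes a WCF-style config file (`<system.serviceModel><client><endpoint address="..."/>`); I have not verified that the NUnitTFS config file has exactly this layout, so check it first. The server name `mytfs:8080` used in the example is hypothetical.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlsplit, urlunsplit


def retarget_address(address: str, new_netloc: str, old_host: str = "teamfoundation") -> str:
    """Swap host and port of address if its host name is old_host; otherwise return it unchanged."""
    parts = urlsplit(address)
    if parts.hostname == old_host.lower():  # urlsplit lowercases hostname
        return urlunsplit(parts._replace(netloc=new_netloc))
    return address


def retarget_endpoints(config_xml: str, new_netloc: str) -> str:
    """Rewrite the address attribute of every <endpoint> element in config_xml."""
    root = ET.fromstring(config_xml)
    for endpoint in root.iter("endpoint"):
        address = endpoint.get("address")
        if address:
            endpoint.set("address", retarget_address(address, new_netloc))
    return ET.tostring(root, encoding="unicode")
```

For example, `retarget_endpoints(text, "mytfs:8080")` turns `http://teamfoundation:8080/...` into `http://mytfs:8080/...` and leaves other endpoints alone. Matching on the parsed host name, rather than a string prefix, avoids touching a host such as `teamfoundationserver`. ElementTree drops XML comments when writing the document back, so keep a copy of the original file.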

The first step is to create a copy of the DefaultTemplate.xaml file, rename it – I called mine xUnitTemplate.xaml – and add it to TFS.

Open the new template in Visual Studio.

We need two arguments, one for the path to the xUnit console and one for the path to the NUnitTFS.exe.

Select Arguments and click Create Argument.

Create the two arguments: xUnit and TFSPublish.

Click Arguments again to close the list.

Select Variables and create one called XUnitResult. It will hold the result (the exit code) of one of the new processes we create.

Note the Variable Type and Scope.

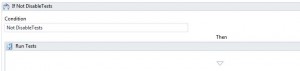

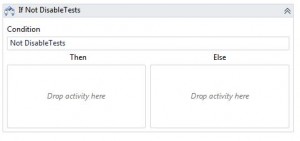

Now scroll down through the process and locate the If statement called If Not Disable Tests and the Sequence called Run Tests.

We want to delete the content of the Run Tests sequence and replace it with our own. The easiest way to do this is to right-click Run Tests and select Delete. This will leave you with something like the following:

Now from the Toolbox find the Sequence (located under Control Flow) and drop one on the Then box. Rename it to Run Tests.

Add an Invoke Process to the Sequence and name it Invoke xUnit Console.

The red exclamation marks show that the new activity still needs to be configured. Open its properties and set the following:

- Arguments: "xUnit.Test.dll /silent /nunit results.xml" (including quotes)

- FileName: xUnit

- Result: XUnitResult

- WorkingDirectory: outputDirectory
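Conceptually, this activity starts a process in a given directory and waits for it to finish. The Python sketch below is my own rough analogue, not how TFS implements it: `FileName` becomes the first element of the command, `WorkingDirectory` becomes `cwd`, and the returned exit code plays the role of the `XUnitResult` variable. The function and parameter names are mine.

```python
import subprocess
from typing import List, Tuple


def build_xunit_command(xunit_console: str, test_assembly: str, results_file: str) -> List[str]:
    """Command roughly equivalent to FileName = xUnit plus the Arguments string above."""
    return [xunit_console, test_assembly, "/silent", "/nunit", results_file]


def invoke_process(command: List[str], working_directory: str) -> Tuple[int, str, str]:
    """Run command in working_directory and return (exit code, stdout, stderr).

    stdout and stderr roughly correspond to what the activity hands to its
    stdOutput and errOutput handlers (TFS delivers them line by line).
    """
    completed = subprocess.run(
        command, cwd=working_directory, capture_output=True, text=True
    )
    return completed.returncode, completed.stdout, completed.stderr
```

For example, `invoke_process(build_xunit_command(r"C:\Tools\xUnit\xunit.console.exe", "xUnit.Test.dll", "results.xml"), output_directory)` would run the tests, where the console path is hypothetical and `output_directory` stands for the folder holding the compiled binaries. Passing a list instead of one string also sidesteps most quoting issues.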

As you can see, we have a hard-coded value here, namely the name of the assembly containing the unit tests. “It can easily be seen”, as we used to say at university when a proof was too easy, so I will leave this as a small homework assignment for you, my dear reader 🙂
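For readers who want a head start on the assignment, here is one possible direction, sketched in Python rather than as workflow activities: find the test assemblies in the output directory by naming convention instead of hard-coding the name. The `*.Test.dll` pattern and the per-assembly results file name are my assumptions, not something the template does. As far as I know, the xUnit console used here takes one assembly per run, so you would invoke it once per file found, and the publish step would then have to handle several results files.

```python
from pathlib import Path
from typing import List


def find_test_assemblies(output_directory: str, pattern: str = "*.Test.dll") -> List[str]:
    """Return the file names of assemblies matching pattern, sorted for a stable order."""
    return sorted(p.name for p in Path(output_directory).glob(pattern))


def xunit_arguments(assembly: str) -> str:
    """Arguments for one xUnit console run.

    The results file is named after the assembly (hypothetical convention),
    so several runs do not overwrite each other's results.xml.
    """
    results_file = Path(assembly).stem + ".results.xml"
    return f"{assembly} /silent /nunit {results_file}"
```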

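You also want to add two activities that write the build messages and build errors (if any): a WriteBuildMessage activity under Handle Standard Output and a WriteBuildError activity under Handle Error Output.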

In the properties, set the Message to stdOutput and errOutput respectively.

We are almost done. Add a second Invoke Process below the one just created and name it, e.g., Publish xUnit Results.

Set Arguments to the following:

    String.Format("-n {0} -t {1} -p ""{2}"" -f {3} -b ""{4}"" ",
        "results.xml",
        BuildDetail.TeamProject,
        BuildSettings.PlatformConfigurations(0).Platform,
        BuildSettings.PlatformConfigurations(0).Configuration,
        BuildDetail.BuildNumber)

Set FileName to TFSPublish and WorkingDirectory to outputDirectory, and lastly add the two output handlers as above.
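To make it concrete what the String.Format expression produces, here is the same format string rendered in Python with made-up values (team project `Lab`, platform `Any CPU`, configuration `Debug`, build number `CI_20110101.1`; all hypothetical). A doubled `""` inside a VB.NET string is an escaped quote, so the platform and build number end up in double quotes; the trailing space from the original format string is kept.

```python
def nunittfs_arguments(results_file: str, team_project: str, platform: str,
                       configuration: str, build_number: str) -> str:
    """Mirror of the String.Format expression passed to NUnitTFS."""
    # -n, -t, -p, -f and -b receive the same values as {0}..{4} in the VB.NET expression.
    return (f'-n {results_file} -t {team_project} -p "{platform}" '
            f'-f {configuration} -b "{build_number}" ')


print(nunittfs_arguments("results.xml", "Lab", "Any CPU", "Debug", "CI_20110101.1"))
```

Note that the team project (`-t`) is not quoted in the original expression, so a team project name containing spaces would presumably need quoting as well.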

Save the template and check it in.

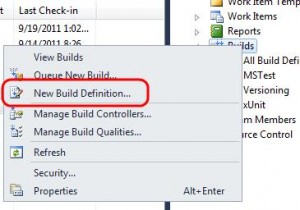

The next step is to create a new build definition that uses the new template.

Open Team Explorer, right-click Builds and select New Build Definition.

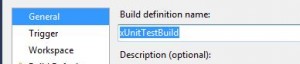

Set the name to something meaningful.

Set the trigger to Continuous Integration.

In Workspace, ensure that the folders you wish to include are active. Here we only have one.

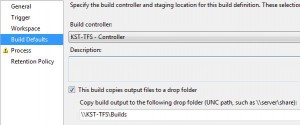

Under Build Defaults set the Build controller and the drop folder. For this test I have just shared a drive on my TFS server.

Under Process we need to set the Build process template. Click on Show Details to expand the panel.

Click New to select the template we created earlier.

Choose Select an existing XAML file and click Browse…

Select the template we just created and press OK.

Press OK again to get back to the initial screen (still under Process).

Under 4. Misc you should see the two arguments we created. Enter the values as shown below.

If you are still seeing a yellow exclamation mark, it may be because you have to set the Configurations to build under 1. Required | Items to Build.

To test that this is actually working, create a solution and add a class library to hold your unit tests. Remember to name the library xUnit.Test (or rather ensure that the assembly is named xUnit.Test.dll).

When you check in the test, the new build should commence.

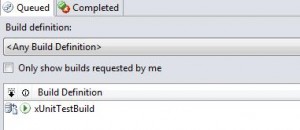

When the build is done, the result is shown:

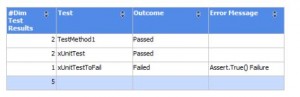

I made two small tests: one that would pass and one that would not.

If you create a TFS report looking at the test runs (using ReportBuilder), you can see something like the following:

We are done!

A few things to note:

- To get TFS to compile and run the tests using xUnit, I create a solution folder (e.g. called Lib) on the same level as my projects and add all the xUnit files to this folder. I then reference xunit.dll from this folder.

- NUnitTFS seems to have changed since the Alpha version, where it was possible to pass the argument "-v". This is no longer possible with newer versions.

- Be sure that the process running the build has access to the drop location.
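One way to verify the last point is to run a small probe as the account the build service runs under, for example from a command prompt started with `runas`. The Python sketch below simply tries to create and delete a uniquely named file in the drop folder; the share name in the example is hypothetical.

```python
import os
import uuid


def can_write_to(folder: str) -> bool:
    """Create and delete a uniquely named probe file in folder; False on any OS error."""
    probe = os.path.join(folder, f"drop-probe-{uuid.uuid4().hex}.tmp")
    try:
        with open(probe, "w") as handle:
            handle.write("probe")
        os.remove(probe)
        return True
    except OSError:  # missing folder, access denied, unreachable share, ...
        return False
```

For example, `can_write_to(r"\\TFSSERVER\Drops")` should return True when the share is usable by that account.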